At last week’s Internet Identity Workshop in Mountain View, California, I led a brainstorming session to identify risks to the success of the new National Strategy for Trusted Identities in Cyberspace (NSTIC, pronounced “EN-stick”). The strategy is to encourage many non-government organizations to provide digital identity and personal data services in a way that meets the needs of individuals, identity providers (“yes, this person is who she claims to be”), attribute providers (“she is registered at our school”), and those who rely on digital identity. What could go wrong with a project like this? What can be done to avoid these threats and risks? To mitigate them when they show up? Meeting notes…

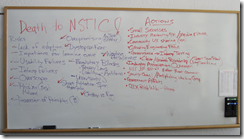

Risks

Lack of adoption. NSTIC relies on the private sector to invest in and build identity infrastructure. There’s a real chance that this investment could happen slowly or unevenly. We’ve seen great technologies wither when they don’t reach critical mass.

Impatience with the learning curve. We learn by doing, and we learn more from problems and failures. NSTIC as a whole could be unfairly discredited after some projects or products fail for technical or business reasons.

Usability failures. Great products fail on even minor user experience defects. We know very little about what great user experience looks like for this next generation of personal data control.

Interop failures. Systems that should work together in theory may not in practice. The causes may include differences in technical philosophy, ego, fuzzy specs, cultural gaps, regulatory differences, or local optimization.

Overscope. We’ve all seen protocols that work at first, become burdened as new features pile on, and lose their clarity and momentum as a result.

Phishing and Malware ($). We know that bad actors will follow the money, as they have from email to search to social media. Stakeholders could lose faith and abandon trust frameworks.

Perversion of principles ($). NSTIC lists core principles. Those principles will be eroded, if not attacked, unless monitored and defended.

Overpromising (by Tech to Policy). Silicon Valley has a tendency to tell Washington that technology offers silver bullets for huge problems.

Dystopian fear. Maybe your government really is out to get you, but dark overwhelming fears could slow or stop the project.

Waiting for winners. One way to manage risk is to wait for a handful of leaders to emerge before joining. This stifles investment, experimentation, and deep learning. The ecosystem needs pioneers and early adopters before winners can emerge.

Regulatory blocks. National and local laws and regulations may interfere with the ability of the ecosystem to grow. Privacy laws, liability, and antitrust rules could stall engineering, investment and adoption.

Uncertainty over liability. However the ecosystem eventually resolves liability, uncertainty in the meantime about how it will be resolved threatens investment.

Short attention span. This is not a weekend project. Will the ecosystem persist until it becomes mainstream?

Hype cycle. Many technologies don’t survive the hype cycle’s peak of inflated expectations or the trough of disillusionment.

Chicken vs. Egg. The system needs governance, startups, large corporate and government users, and the public to buy in. Nobody wants to jump in first.

Prevention and Mitigation Actions

Highlight small successes. We should celebrate small, incremental successes more than big-bang moments. Don’t oversell or overpromise.

Industry Marketing and PR. The ecosystem needs its own media and voice to respond to concerns, to put forth a common vision, to reach out to newbies and decision makers, to evangelize the benefits of the approach.

Share community UX experience. We could plan and pool knowledge and experiments as a community of practice. Where a company like Google might not be able to try different login UIs, because variation could be perceived as phishing, smaller companies can experiment and share results, leading to convergence and adoption.

Cultivate Engineering Focus. Keeping designers and engineers focused on current release cycles helps a developer community avoid feature creep and intriguing digressions.

Foster Interop Testing. Other industries develop standards for testing interop, hold interop workshops and set up backchannels for feedback. We just need a convening body to coordinate tests.

Formulate, publish and update a Clear/Graded Roadmap. Short-term plans with long-term visions. Plans to reach specific business and technology milestones. Long-term visions for where those protocols and practices should go. Clearly communicated and widely agreed upon, so the industry avoids forking, surprises, and hype.

Industry association outreach. The NSTIC strategy depends on achieving a critical mass of adoption within various communities. Many are represented by industry associations or professional organizations. Outreach services could provide education, evangelism, engagement and help with rough spots in adoption.

Recruit legacy identity authentication communities. NSTIC is not the first attempt to solve these problems. A look at NIST SP 800-63 shows existing identity and security standards have communities of their own, including tens of thousands of implementers. Outreach can smooth the way for education and adoption.

Security Council. It’s not too early to start a security conversation within the NSTIC ecosystem. At a minimum, we could start a working group to prepare for the first wave of phishing and identity theft.

Government Affairs. Governments are huge stakeholders in NSTIC: not just US federal agencies, but also US state and local governments. There is every reason to expect this program to be transnational, so governments around the world are also stakeholders. They will want to understand the policy implications of the rapidly changing technologies, and the effect that rules, regulations, directives and laws will have on the ecosystem. Effective communication and advocacy on behalf of the industry, especially for the many small startups and for individuals who lack a voice, could keep government perspectives and actions cordial and supportive.

OIX Risk Wiki. The Open Identity Exchange (OIX) has an active security thread on its wiki.

Risk, Response, Community and PDEC

So this brings to mind three roles for PDEC, the Personal Data Ecosystem Consortium: Communicate, Convene, and Community.

Many of these responses involve marketing communication functions on behalf of the community’s stakeholders. The items listed above (industry marketing and public relations, government affairs, highlighting small accomplishments, and publishing a roadmap) are the kinds of things a consortium can do well. Speakers bureau, anyone?

Similarly, bringing people together to talk and work is another role for PDEC. We can serve some of our outreach goals by inviting people and organizations to join in projects and conversations. For example, we could invite identity providers and relying parties to interop workshops. We might also host security roundtables and mailing lists.

Last, today’s identity ecosystem doesn’t have a real voice for individuals, a way for people to talk about this topic. PDEC might offer community services to help people talk to each other, with the industry, and with other stakeholders.

The “Death to NSTIC!” motto, all in fun, reminds us that bad things happen and that preparedness is part of planning.

I want to thank the IIW folks who crowded into room E for their work, as reported here. I also want to thank the Identity Commons for creating an environment where IIW and PDEC can emerge.